Deep Learning is a subfield of Machine Learning that uses neural networks, which are loosely inspired by the way neurons in the human brain function.

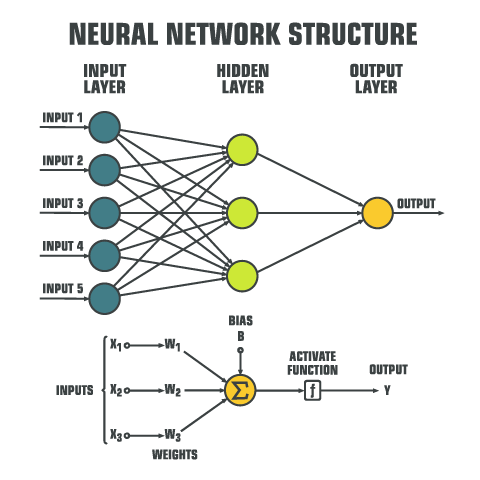

Neural networks are composed of interconnected artificial neurons arranged in layers. Input neurons pass weighted inputs to the next layer, called a hidden layer, whose neurons each apply a function to the inputs they receive. Output neurons produce the network's final result. The ‘deep’ refers to the depth of these neuron layers, i.e. having multiple hidden layers.

Types of Neural Networks

A feedforward neural network is one in which information only moves forward, from the input layer through the hidden layers to the output layer, i.e. there are no loops.
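
A minimal sketch of a forward pass through a small feedforward network, assuming a sigmoid activation in every neuron and hand-picked hypothetical weights for a 2-3-1 network (2 inputs, one hidden layer of 3 neurons, 1 output). The helper names `layer_forward` and `feedforward` are illustrative, not from any library. Activations flow from one layer to the next and are never fed back.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs plus its own bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def feedforward(inputs, layers):
    """Pass activations through the layers in order; nothing is fed back."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network: (weights per neuron, biases) for each layer.
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]
output = feedforward([1.0, 0.0], layers)
print(output)
```

Each extra `(weights, biases)` pair added to `layers` makes the network one layer deeper.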

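Some networks have neurons that link back to neurons in the input or hidden layers, resulting in a feedback loop. These are known as recurrent neural networks. (Backpropagation is something different: it is the algorithm used during training to propagate errors backwards through the network and work out how each weight should change.)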
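
A feedback loop can be sketched as a single neuron whose previous output `h` is fed back in alongside each new input, so an early input keeps influencing later outputs. Networks with feedback loops like this are usually called recurrent neural networks. The tanh activation and the names `rnn_forward`, `w_in` and `w_rec` are illustrative assumptions, not taken from these notes.

```python
import math


def rnn_forward(sequence, w_in, w_rec, bias):
    """Single recurrent neuron: its previous output is fed back as an extra input."""
    h = 0.0  # hidden state (the fed-back value) starts at zero
    states = []
    for x in sequence:
        # Feedback: the new state depends on the current input AND the previous state.
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states


states = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5, bias=0.0)
print(states)
```

Even though only the first input is non-zero, the later states are non-zero because of the loop. Setting `w_rec=0.0` removes the feedback, and the neuron then "forgets" the first input immediately.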

Perceptron

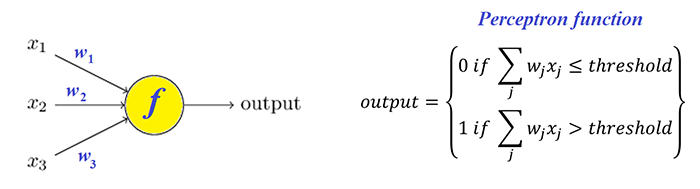

Neurons used in the hidden layers of a neural network are often named for the activation function that they use. The artificial neuron known as a perceptron accepts inputs and outputs yes/no or 0/1 (using a step function), making it a binary classifier.
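
A minimal perceptron sketch, assuming the step function fires (outputs 1) when the weighted sum plus bias is at least 0. The weights `[1.0, 1.0]` and bias `-1.5` are hypothetical values chosen by hand so that the perceptron behaves like a logical AND gate.

```python
def step(z: float) -> int:
    """Step activation: fire (1) if z reaches the threshold of 0, otherwise 0."""
    return 1 if z >= 0 else 0


def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus bias, passed through the step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hand-picked (hypothetical) weights that make the perceptron act as an AND gate.
weights, bias = [1.0, 1.0], -1.5
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, perceptron([x1, x2], weights, bias))
```

Only the input `(1, 1)` pushes the weighted sum above the threshold, so the output is always exactly 0 or 1.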

A trained perceptron splits its inputs into those that fire the neuron and those that do not. This only works perfectly if the data set is linearly separable, i.e. a straight line (or, in more dimensions, a hyperplane) can divide the two classes. That dividing line is the decision boundary, which separates the labels the perceptron assigns.
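
The sketch below trains a perceptron with the classic perceptron learning rule (after each mistake, add `lr * error * x` to the weights and `lr * error` to the bias) on the OR truth table, which is linearly separable. The function name `train_perceptron`, the learning rate of 1.0 and the cap of 20 epochs are illustrative assumptions. After training, the weights `w` and bias `b` define the decision boundary `w[0]*x1 + w[1]*x2 + b = 0`.

```python
def step(z: float) -> int:
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule; reaches zero errors if the data is linearly separable."""
    n_features = len(samples[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            pred = step(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = target - pred  # -1, 0 or +1
            if error != 0:
                errors += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(or_data)
print("weights:", w, "bias:", b)
```

For this data, training stops with weights `[1.0, 1.0]` and bias `-1.0`, so the decision boundary is the line `x1 + x2 = 1`. XOR data, by contrast, is not linearly separable, so the loop would never reach an error-free epoch and would simply stop after `epochs` passes.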

Sigmoid Neurons

Sigmoid neurons are similar to perceptrons in that they weight each input by its importance and add a bias value. However, they do not give a binary output as perceptrons do (using the step function). Instead, the output from a sigmoid neuron is a real number between 0 and 1.

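They use the sigmoid function $\sigma(z) = \frac{1}{1 + e^{-z}}$ to evaluate the output, where $z = \sum_j w_j x_j + b$ is the weighted sum of the inputs $x_j$ with weights $w_j$, plus the bias $b$.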
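
A minimal sigmoid neuron sketch. The names `sigmoid` and `sigmoid_neuron` and the example weights are hypothetical; the sigmoid is written in a numerically stable two-branch form (an implementation choice, not something from these notes) so that `math.exp` does not overflow for large negative inputs. Large positive sums give outputs close to 1 and large negative sums give outputs close to 0, so the sigmoid behaves like a smoothed step function.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-z); mathematically always between 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form for negative z that avoids computing a huge e^-z.
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_neuron(inputs, weights, bias):
    """Weighted sum plus bias, squashed by the sigmoid instead of a step function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


# Hypothetical weights and bias: the output is graded rather than 0/1.
for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, round(sigmoid_neuron([x1, x2], [1.0, 1.0], -1.5), 3))
```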

Training Neural Networks

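A neural network is ‘trained’ by being fed a dataset, which is usually split into a training set and a test set. In k-fold cross-validation, the dataset is divided into k smaller subsets called folds; each fold takes a turn as the test set while the remaining folds are used for training.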
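
A sketch of splitting sample indices into k folds for k-fold cross-validation, assuming the data is shuffled once with a fixed seed. The function name `k_fold_splits` is hypothetical; libraries such as scikit-learn provide their own versions of this.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, test_indices); each fold is the test set exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    fold_size, remainder = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        # Spread any leftover samples over the first few folds.
        size = fold_size + (1 if i < remainder else 0)
        folds.append(indices[start:start + size])
        start += size
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


splits = list(k_fold_splits(10, k=5))
for train, test in splits:
    print("train:", train, "test:", test)
```

Every sample is used for testing exactly once and for training in the other k - 1 rounds.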

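Like other iterative optimisation methods such as simulated annealing, the neural network makes a guess and uses a loss function to measure how close its guess was to the actual value. It then adjusts its weights to reduce the loss, aiming for a minimum (in practice this is often a local minimum rather than the global one). A common loss function is the mean squared error: the average of the squared differences between the predicted and actual values.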
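
The mean squared error over $n$ predictions $\hat{y}_i$ with actual values $y_i$ is $\frac{1}{n}\sum_{i=1}^{n} (\hat{y}_i - y_i)^2$. A minimal sketch, with a hypothetical function name and made-up example values:

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between each guess and the actual value."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


loss = mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0])
print(loss)
```

Squaring makes every error positive and penalises large misses more heavily than small ones; a perfect set of guesses gives a loss of 0.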

A neural network learns at a rate set by the learning rate, denoted $\eta$ (the Greek letter eta). Over many passes through the training data (each full pass is called an epoch), it gradually adjusts the weights so that its outputs map to the actual values correctly.
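
A sketch of how the learning rate and epochs fit together, using gradient descent (the most common way of making these weight adjustments) to minimise the mean squared error. To keep it short, this assumes a single linear neuron $\hat{y} = wx + b$ rather than a sigmoid neuron, hypothetical data generated from $y = 2x + 1$, $\eta = 0.05$ and 2000 epochs; `train_linear_neuron` is an illustrative name.

```python
def train_linear_neuron(xs, ys, eta=0.05, epochs=2000):
    """Batch gradient descent on MSE for one linear neuron y_hat = w*x + b."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):  # one epoch = one full pass over the training data
        preds = [w * x + b for x in xs]
        # Partial derivatives of MSE = (1/n) * sum((pred - y)^2) w.r.t. w and b.
        grad_w = (2.0 / n) * sum((p - y) * x for p, y, x in zip(preds, ys, xs))
        grad_b = (2.0 / n) * sum(p - y for p, y in zip(preds, ys))
        # Step downhill; eta (the learning rate) scales the size of each step.
        w -= eta * grad_w
        b -= eta * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]  # hypothetical data from y = 2x + 1
w, b = train_linear_neuron(xs, ys)
print(w, b)
```

After training, `w` and `b` end up very close to 2 and 1. A much smaller $\eta$ would need more epochs to get there, while a much larger one can make the updates overshoot and diverge.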

Advantages & Disadvantages

Pros:

- Makes use of all the training data, so no data is wasted; with a well-labelled dataset, this can make it more accurate.

- Accuracy tends to increase as more data is fed in

- Useful when no known algorithms exist for solving a problem. The neural network can just be fed the inputs, and then the outputs it produces can be verified.

Cons:

- Typically requires significantly more data than SVMs

- Typically much slower to train than SVMs

Resources

3Blue1Brown’s video on neural networks

How to train a network